Looking for suitable tools for querying and calculating large files

In data analysis, it is inevitable to encounter large data files that cannot be loaded in memory. How to query and calculate large files? This paper analyzes and compares the advantages and disadvantages of several common methods, esProc SPL is the most suitable script for data analysts to process large files.Go to Looking for suitable tools for querying and calculating large files for more infomation.

What is a large file? A large file is a file that is too large to be read in at one time because of insufficient computer memory. In this case, direct use of desktop data tools (such as Excel) is powerless, often need to write a program to deal with it. Even if the program is written, a large file must be read in batches for calculation and processing. Finally, the batch processing results need to be properly summarized according to different calculation types, which is much more complicated than the processing of small file. There are many types of large files, such as text files, Excel files, XML files, JSON files, HTTP files. Among them, text (txt or CSV) is the most common.

The program languages that can be used to process large files are as follows:

1. Conventional high-level programming languages, such as Java, C/C++, C#, Basic, etc.

2. The file data is imported into the database and processed by SQL

3. Python

4. esProc SPL

Taking text file as an example, this paper introduces the characteristics of the above methods for large file calculation in turn. For other types of file, except the way of reading data is different, the processing idea after reading is similar to text file.

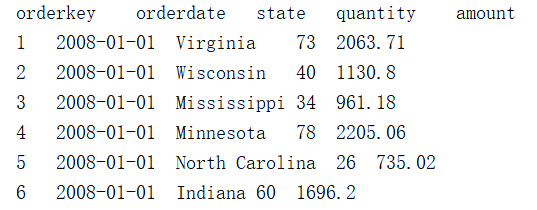

The file to be used in this paper orders.txt has five columns: orderkey, orderdate, state, quantity and amount. The column is separated by tab. The first line in the file is the column name, with a total of 10 million lines of data. As follows:

I. High-level languages(Take Java as an example)

Using high-level language programming to calculate, how to write the calculation process is related to the specific calculation type. Different types of calculation need different calculation process. Let's take a look at the simplest aggregate calculation, such as calculating the sum of amount in orders.txt. It is written in Java as follows:

BufferedReader br = new BufferedReader(new InputStreamReader( new FileInputStream("orders.txt") ) );

String[] fieldNames = br.readLine().split("\t");

double sumAmount = 0;

String line = null;

while(( line = br.readLine() )!= null) {

String[] values = line.split("\t");

float amount = Float.parseFloat(values[4] ); // Assume that column 5 is known as amount

sumAmount += amount;

}

This program is to read a line of data at a time, the file access takes too much time and the running is slow. If you want to run faster, you need to read in a large block of data (such as 10000 lines) at a time, and then use the code to split the data into lines for processing, which will be very troublesome.

This is the simplest calculation, with no data filtering, grouping, sorting and other requirements. Other calculations for the average, maximum and minimum are similar to this.

If you want to do group aggregation, the code will be much more troublesome. For example, after grouping by state, calculate the total order amount of each state. The calculation idea is as follows: save each group, and then read the state value of each row line by line. Compare with the saved group, if found, add the order amount of this line to the group; if not, add a new group; until all lines are processed. If multiple statistical values need to be calculated by grouping multiple fields, such as total order amount and maximum order amount by date and state grouping, the complexity of the program will increase a lot.

Sorting is more cumbersome and requires intermediate cache files. For example, if you want to sort the order amount from large to small, you can't read all the data to sort because of insufficient memory. The calculation idea is as follows: read 5000 lines of data (the number of lines to be read depends on the memory), sort them and save them to a temporary file, and then read 5000 lines to sort and save them to another temporary file until all the data is processed. Finally these temporary files are merged in an orderly manner -- read out the first line of each temporary file, find out which line should be in the top, write it to the result file, and then read another line from the temporary file, continue to compare and find the first line to write to the result file. Do this until all data lines are written to the result file.

It is very tedious to process large files with high-level language, which is almost impossible for none professional programmers.

II. Using database

Many calculation algorithms are built into the database, and the query and calculation of data is relatively perfect, and the performance is also relatively good. Therefore, it can be considered to import the large file into the database, generate the database table, and then use SQL to query and calculate the data.

The trouble with this method is to import the file into the database. Before importing the data, you need to create the table structure and specify the data type of each column. For example, the SQL for creating the order table is as follows:

CREATE TABLE orders ( orderkey INTEGER NOT NULL,

orderdate DATE NOT NULL,

state CHAR(20) NOT NULL,

quantity INTEGER NOT NULL,

amount DECIMAL(15,2) NOT NULL,

PRIMARY KEY(orderkey)

);

If you change to another data file with other structure, you need to write another SQL to create the table. In particular, you need to specify the data type, otherwise the database will not be able to accept the data, which is very unfamiliar to many none professional programmers.

For the import process, the database generally provides tools to import text files directly, while other files cannot be imported directly, and they need to be converted into text files first. Excel files can be directly saved as text, but for XML files, JSON files, HTTP files, etc., it is necessary to write programs to convert them into text files, or to write programs to read in the file, generate an SQL statement, and write the data to the database table. No matter what, it is a very tedious thing.

After the data is stored in the database table, query and calculation are really very simple, grouping and sorting are also very easy. Examples are as follows:

1. Calculate total order amount

select sum(amount) from orders;

2. Group by state and calculate total order amount by state

select state, sum(amount) from orders group by state;

3. Sort by order amount

select * from orders order by amount;

It is very convenient to query and calculate large amount of data by using database, but it is very complicated to import large files into database, and there are certain professional skills requirements. Compared with high-level language, the feasibility is greatly improved, but it is still not good enough.

III. Python

Python does not provide syntax for processing large files directly. Its implementation idea is similar to that of high-level language. For example, the sum of order amount calculated in the previous section is written as follows:

sumAmount=0

with open("orders.txt",'r') as f:

while True:

line = f.readline()

if not line:

break

sumAmount += float(line.split("\t")[4])

print(sumAmount)

For complex operations such as grouping and sorting, if we implement the idea mentioned above, Python is also very troublesome, which is not much simpler than Java. But Python has a pandas package that encapsulates many structured data processing functions. If it is a small file that can be read into memory, it can be handled easily. Unfortunately, pandas doesn't provide a direct batch processing method for large files, so we have to write programs by ourselves. The complexity of using pandas is much less than that of direct hard writing, but the ideas discussed above still need to be implemented.

It's too troublesome to write the grouping operation. Let's write the summation operation based on pandas to feel it.

import pandas as pd

chunk_data = pd.read_csv("orders.txt",sep="\t",header=None,chunksize=100000)

sumAmount=0

for chunk in chunk_data:

sumAmount+=chunk[4].sum()

print(sumAmount)

After using pandas, the text can be regarded as structured data row by row, and it is no longer necessary to split it by yourself.

Python does not have a big problem with small files, but it does not provide effective support for large files. Compared with the high-level language, the reduced workload is very limited, the usability is not high, and it is not as good as the database.

In addition, Python is an interpreted execution language, and its execution speed is much lower than that of high-level language, it is more obvious when processing large files.

IV. esProc SPL

esProc SPL is a professional open-source data processing tool. Like the database, it has built-in query and calculation algorithms. It can directly use files such as text, Excel, XML, JSON, etc.to calculate, without the process of importing data.

SPL provides a cursor, which can read data in batches and then calculate, so it is convenient to process large files. As in the previous example, you only need one line of code:

1. Calculate total order amount

=file("orders.txt").cursor@t().total(sum(amount))

It is also very easy if you want to add a filtering. For example, you can only count the total order amount since 2009:

=file("orders.txt").cursor@t().select(orderdate>=date("2009-01-01")).total(sum(amount))

Grouping and sorting are also simple:

2. Group by state and calculate total order amount by state

=file("orders.txt").cursor@t().groups(state;sum(amount))

3. Sort by order amount

=file("orders.txt").cursor@t().sortx(amount)

SPL even allows you to query files directly using SQL. For example, the previous three examples are written as follows:

$select sum(amount) from "orders.txt"

$select state, sum(amount) from "orders.txt" group by state

$select * from "orders.txt" order by amount

SPL also has built-in parallel computing, and can make full use of multi-core CPU to improve performance, which is particularly useful for large files. For example, group aggregate calculation is written as follows:

=file("orders.txt").cursor@tm(;4).groups(state;sum(amount))

It will be calculated in 4-way parallel mode, and the speed can be increased by 2-3 times on ordinary multi-core laptop. Relatively speaking, high-level languages can achieve parallelism, but it is not only troublesome, but also only professional programmers can do it. Python basically can not implement parallel. SQL depends on the database. It’s Ok to implement parallel for professional database such as Oracle, but not for simple database such as MySQL.

esProc SPL has the advantages of SQL and avoids the trouble of data import. For desktop data analysts, it is the most appropriate tool for processing large files.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL