How Reporting Tools Access Hadoop Data

A Hadoop ecosystem consists of the core application HDFS and peripheral applications, including Hive, HBase, Spark, and others. Hive is the easiest to access by the reporting tool because it provides official JDBC driver. Other applications are much more difficult to access because they offer API interface only. Most reporting tools can only invoke the API interface using a user-defined data set, and the code is extremely complicated. A few encapsulate API interface to make data retrieval simple, but the computing process is complex still and a lot of hardcoding work is needed.

A better choice is the open-source, Java-based class library SPL. SPL encapsulates the API interface as a simple and easy-to-use retrieval function that has uniform syntax, making it convenient to access both HDFS and a lot of peripheral applications. It has great computational capabilities and the JDBC driver is easy to be integrated by the reporting tool.

SPL offers convenient and easy-to-use retrieval functions that can retrieve data from various Hadoop data sources. For instance, HDSF stores structured files of many types and we are trying to retrieve data from them:

A |

B |

|

1 |

=hdfs_open(;"hdfs://192.168.0.8:9000") |

|

2 |

=hdfs_file(A1,"/user/Orders.txt":"UTF-8").import@() |

// Import as tab-separated txt |

3 |

=hdfs_file(A1,"/user/Employees.csv":"UTF-8").import@tc() |

// Import as a csv where the first row contains column names |

4 |

=hdfs_file(A1,"/data.xls":"GBK").xlsimport@t(;"sheet3") |

// Import as an xls and retrieve data from sheet3 |

5 |

=hdfs_close(A1) |

|

6 |

return A2 |

//Return A2 only |

SPL can also retrieve data from files with non-standard formats and from multilevel JSON or XML.

Retrieve data from HBase:

A |

|

1 |

=hbase_open("hdfs://192.168.0.8", "192.168.0.8") |

2 |

=hbase_scan(A1,"Orders") |

3 |

=hbase_close(A1) |

4 |

return A2 |

Retrieve data from Spark:

A |

|

1 |

=spark_client("hdfs://192.168.0.8:9000","thrift://192.168.0.8:9083","aa") |

2 |

=spark_query(A1,"select * from tablename") |

3 |

=spark_close(A1) |

4 |

return A2 |

Hive supplies both JDBC and API interfaces. The latter is higher in performance. SPL supports JDBC interface and encapsulates API interface. Their uses are as follows:

A |

|

1 |

=hive_client("hdfs://192.168.0.8:9000","thrift://192.168.0.8:9083","hive","asus") |

2 |

=hive_query(A1,"select * from table") |

3 |

=hive_close() |

4 |

return A2 |

SPL has a wealth of built-in functions so that it can retrieve data using simple and intuitive syntax, without the need of any complicated hardcoding. To perform a query on orders data according to interval-style conditions, for instance, we have the following SPL code, where p_start and p_end are parameters:

A |

|

1 |

=hdfs_open(;"hdfs://192.168.0.8:9000") |

2 |

=hdfs_file(A1,"/user/Orders.txt":"UTF-8").import@() |

3 |

=hdfs_close(A1) |

4 |

=A2.select(Amount>p_start && Amount<=p_end) |

SPL data retrieval processes are highly similar, and SPL syntax is universally applied. So, no detail will be explained separately about them.

As code written in an RDB, SPL code can be integrated into a reporting tool through its JDBC driver. Take the above SPL code as an example, we can first save it as a script file and then invoke its name in the reporting tool as we do with a stored procedure. Assume we are using Birt and the code can be like this:

{call intervalQuery(? , ?)}

More examples:

A |

B |

|

3 |

… |

|

4 |

=A2.select(Amount>1000 && like(Client,\"*S*\")) |

//Fuzzy query |

5 |

=A2.sort(Client,-Amount)" |

//Sort |

6 |

=A2.id(Client) |

//Distinct |

7 |

=A2.groups(year(OrderDate);sum(Amount)) |

//Grouping & aggregation |

8 |

=join(A2:O,SellerId;B2:E,EId) |

/Join |

SPL also supports standard SQL syntax in order to help beginners adapt. The syntax is suitable for handling computing scenarios involving short and simple code. The above query based on interval-style conditions can be rewritten as the following SQL statement:

A |

|

1 |

=hdfs_open(;"hdfs://192.168.0.8:9000") |

2 |

=hdfs_file(A1,"/user/Orders.txt":"UTF-8").import@() |

3 |

=hdfs_close(A1) |

4 |

$select * from {A2} where Amount>? && Amount<=?; p_start, p_end |

More examples can be found in Examples of SQL Queries on Files.

SPL’s most prominent feature is its outstanding computational capabilities, which simplify computations with complex logic, including stepwise computations, order-based computations and post-grouping computations. SPL can deal with many computations that are hard to handle in SQL effortlessly. Here is an example. We are trying to find the first n big customers whose sales amount occupies at least half of the total and sort them by amount in descending order.

A |

B |

|

1 |

… |

/Retrieve data |

2 |

=A1.sort(amount:-1) |

/ Sort data by amount in descending order |

3 |

=A2.cumulate(amount) |

/ Get the sequence of cumulative totals |

4 |

=A3.m(-1)/2 |

/ The last cumulative total is the final total |

5 |

=A3.pselect(~>=A4) |

/ Get position of record where the cumulative total reaches at least half of the total |

6 |

=A2(to(A5)) |

/ Get values by position |

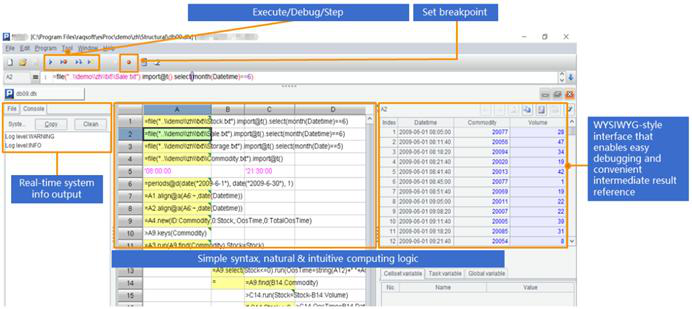

SPL has a professional IDE equipped with all-around debugging functionalities and letting users to observe the result of each step with grid-style coding, making it particularly suited to implementing algorithms with complex logic.

SPL also supports many more data sources, including WebService, RESTful, MongoDB, Redis, ElasticSearch, SalesForce, Cassandra and Kafka, and the mixed computations between any types of data sources or databases.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version