Performance Optimization - 4.3 [Traversal technology] Parallel traversal

Performance Optimization - 4.2 [Traversal technology] Multipurpose traversal

We discussed the method of segmenting external storage data set in Chapter 2, which can be used not only for binary search, but more importantly, for segmented parallel computing. By distributing the computation load to multiple CPUs, it can often implement a performance improvement close to linear growth.

SPL provides a convenient parallel computing syntax:

| A | B | |

|---|---|---|

| 1 | =file(“orders.txt”) | |

| 2 | fork to(4) | =A1.cursor@t(area,amount;A2:4) |

| 3 | return B2.groups(area;sum(amount):amount) | |

| 4 | =A2.conj().groups(area;sum(amount)) | |

The fork statement will start multiple threads to execute their own code blocks in parallel. The number of threads is determined by the length of the parameter sequence after fork. fork will simultaneously assign these parameters to each thread in turn, and they can be obtained by referencing the value of the cell where fork is located in the code block. When all threads are executed, the calculation result of each thread (the value of return in code block) will be collected into the cell where fork is located, and assembled into a sequence in the order of threads. Then the code continues to execute.

In this example, A2 will generate four threads, and each thread will get 1,2,3 and 4 as parameter respectively. B2 will generate a corresponding segment cursor to perform grouping and aggregating calculation. After all the four threads are executed, each thread’s return value (a table sequence) will be collected into the fork cell (i.e., A2), and then these table sequences will be concatenated and aggregated again to obtain the grouping and aggregating result for original data table.

In addition to segmenting data table, fork can also be used for the parallel computing of multiple files. For example, some orders are stored in 12 files, one file per month. Now we want to calculate the number of orders with an amount over 50 in each region:

| A | B | |

|---|---|---|

| 1 | fork to(12) | =file(“orders”\A2\“.txt”) |

| 2 | =B1.cursor@t(area,amount;A2:4) | |

| 3 | =B2.select(amount>=50) | |

| 4 | return B2.groups(area;count(1):quantity) | |

| 5 | =A1.conj().groups(area;sum(quantity)) | |

It will start 12 threads to calculate. Note that after counting with count()inside the thread, you need to use sum() to sum outside the thread, instead of using count() again.

SPL simplifies the mechanism of parallel computing. It will assume all threads are started at the same time, and that the whole task is completed only when all threads are executed. Although it cannot be used to handle system-level complex parallel tasks, the syntax and code are much simpler and enough for most structured data computations.

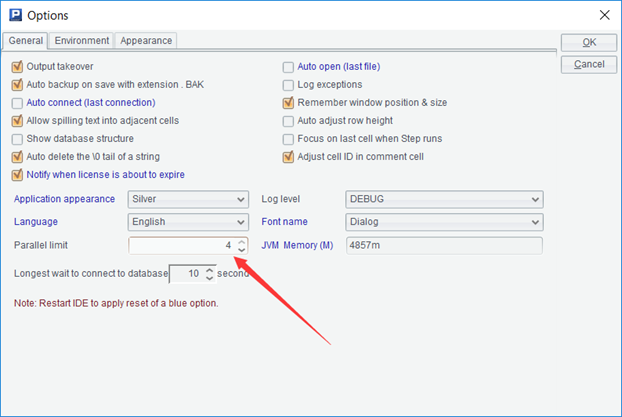

When executing in parallel, you need to first set the parallel limit in esProc options:

After setting, SPL will generate at most the set number of threads physically. If the parameter sequence after fork is longer, it will be forced to execute serially. For example, the length of parameter sequence of fork in the previous example is 12, while the parallel limit here is set as 4, SPL will actually start only 4 threads and put the first four tasks of fork into the 4 threads in turn to execute. When a thread becomes idle after execution, put a task that has not been executed into the thread, and repeat this process until all tasks are executed. In this way, there seems to be 12 threads logically, but in reality, there are only 4 threads.

Under this mechanism, it would be beneficial to split a task into more parts. Because the actual execution time of each task is not always the same, if each thread physically executes only one task, then the fast-executing thread has to wait for other slow-executing threads after it executes its task. If a task is split into more parts, the fast thread can execute more tasks, which will reduce the total wait time, make the load more balanced and improve the overall performance. However, the disadvantage of splitting task into more parts is that it will occupy more memory to store the results returned by threads, and will require some cost to do thread switching and scheduling, so it is not the case that the more parts the task is split, the better performance you will get. An appropriate number of splits needs to be determined based on actual conditions.

Usually, each thread will correspond to one CPU for execution. If the number of threads generated exceeds the number of CPUs, the operating system will use time-sharing technology to switch different threads on the CPUs. These mechanisms will consume CPU and memory resources, resulting in a decrease in overall performance. Therefore, when setting the parallel limit, it should set a value that doesn’t exceed the number of CPUs (slightly less is better), because some CPUs also need to handle other tasks such as operating system processes.

Some servers have a lot of CPUs, possibly up to dozens. However, sometimes we find that setting a larger parallel limit doesn’t necessarily achieve better performance. One reason is that more threads will lead to an increase in scheduling cost, and a more likely reason is that the hard disk does not have the same parallel capability. Multiple threads will share the same set of hard disks, and when external storage computation is involved, data also needs to be read from hard disks. However, the total throughput capacity of hard disk is fixed, if its capacity limit is reached, there is no way to run faster, resulting in the phenomenon that CPU is idle, waiting for the hard disk. Especially for mechanical hard disk, parallel reading will cause serious head jumping, resulting in a significant decrease in hard disk performance, and it is highly likely that the more threads there are, the worse the performance will be.

The CPU and hard disk configuration of a server should be balanced to adapt to computing tasks. Some servers have a lot of powerful CPUs, but they are equipped with low-speed mechanical hard drives for large storage, which often fails to fully utilize the performance of CPUs, resulting in waste. If the money spent on CPUs is spent on memory or high-speed SSDs, it is likely to get better performance at a lower cost.

Performance Optimization - 4.4 [Traversal technology] Load from database in parallel

Performance Optimization - Preface

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL