How far is SPL from a vector database?

ChatGPT has made large language models (LLMs) popular, and vector databases along with them. Training an LLM is too expensive, and the cycle for an LLM to learn new knowledge is too long; a vector database can act as the “memory” module of an LLM, finding old problems similar to a new problem and handing them over to the LLM for processing, which significantly expands the application scope of LLMs. In fact, vector databases were already used in traditional AI and machine learning scenarios such as face recognition, image search, and voice recognition.

The main task of a vector database is to search a massive amount of vector data for the vectors most similar to a target vector. Logically, such a task is not difficult to implement, because it is merely a TopN operation, which is easy to code in the SQL of a traditional relational database. However, because no order can be defined on high-dimensional vectors, it is impossible to create an ordinary index in advance, so a traditional database has to fall back on brute-force traversal, resulting in a tremendous amount of computation and poor performance. In contrast, a vector database is specially optimized for this problem and provides efficient methods for finding similar vectors, which substantially improves performance.
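The brute-force TopN search described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `euclidean` and `top_n_similar`, the 128-dimensional random data, and the choice of Euclidean distance are all hypothetical and not taken from any particular database.

```python
import heapq
import math
import random


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_n_similar(vectors, target, n):
    """Return the indices of the n vectors closest to target.

    Every stored vector is compared with the target, so the cost grows
    linearly with both the number of vectors and their dimensionality.
    """
    return heapq.nsmallest(n, range(len(vectors)),
                           key=lambda i: euclidean(vectors[i], target))


random.seed(0)
# Hypothetical test data: 1000 random vectors with 128 dimensions each.
data = [[random.random() for _ in range(128)] for _ in range(1000)]
query = data[42]  # a stored vector is its own nearest neighbour
nearest = top_n_similar(data, query, 5)
```

The logic is trivial, which is the point of the paragraph: the difficulty lies not in expressing the search but in avoiding a full traversal for every query.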

A vector database seems to be a kind of special-purpose database that can perform vector search once the vector data is loaded. After all, that is how we use a relational database: load the structured data and then query it in SQL, usually without caring about how the database parses and optimizes the SQL.

However, things are not that simple; implementing vector search with a vector database is complex and scenario specific, as the following aspects show:

1. A vector database usually does not provide a syntax as simple and easy to use as the SQL of a relational database; it only provides APIs for basic algorithms. Programmers have to call these APIs from a relatively low-level programming language (such as C/C++ or Java; some slightly more advanced products also provide a Python interface) and implement vector search themselves. Moreover, none of these languages except Python provides a mature and popular vector data type, which makes vector-related calculations inconvenient to develop. Even in Python, which does provide vector data types, implementing vector-related calculations is still difficult because of its limited abilities in big data processing and parallel computing. Such problems do not exist in a relational database, where we can query data directly in SQL and usually do not need to rely on the computing abilities of a host programming language.

2. Vector-related calculation requires data preprocessing based on the specific scenario, without which subsequent operations lose their meaning. For example, floating-point vectors require normalization, dimensionality reduction, transformation, etc.; binary vectors require data conversion, dimension sorting and selection, etc. Even for data of the same format, the preprocessing methods may vary: text vectors may need only ordinary dimensionality reduction, while image data may also require convolution. Not only are such preprocessing methods numerous, but they also have many parameters to adjust, and the parameters are often tied to the specific data, so these methods depend heavily on the scenario. General vector databases do not provide such preprocessing methods, and even when they do, they offer only a few fixed methods that cannot meet specific needs. As a result, we have to resort to other programming languages or introduce third-party technologies to preprocess the data. In contrast, processing the structured data of a relational database is much simpler: preprocessing is usually unnecessary, and when it is needed, it is very simple, involving only encoding, data type conversion, etc., which SQL itself can handle.

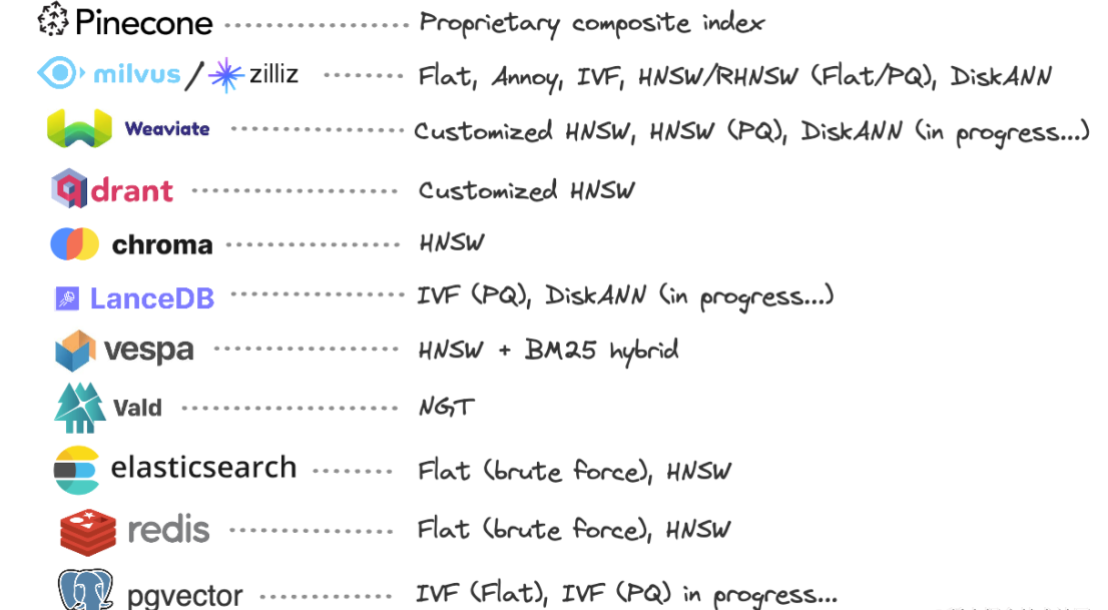

3. There is a need to choose an algorithm for creating index or even to choose a vector database. The key of efficient vector search is to have an index that adapts to the characteristics of data distribution. However, there are too many index creation algorithms, such as k-means clustering, IVF-PQ, Faiss, HNSW, LSH. Understanding these algorithms alone requires a lot of mathematical knowledge and, usually, only after repeatedly adjusting the parameters can a clustering algorithm with high search efficiency and high accuracy be obtained (take the parameter k of k-means as an example, it is almost impossible for developers who have little knowledge on data or lack experience to set a suitable k value). What’s worse, a vector database usually only provides a few index algorithms, yet such algorithms are not universally applicable, and need to be chosen based on the characteristics of data distribution. If a vector database is chosen arbitrarily, and the index is found unsuitable for the data of the database after a long time of algorithm parameter adjustment (the search efficiency or accuracy cannot meet requirements), then changing the database may be a disaster. The figure below shows the index creation methods of several top-ranked vector databases:

4. There is no established method for evaluating similarity. Vector databases provide only several commonly used methods. For example, Pinecone, one of the most popular vector databases at the time of writing, only provides Euclidean distance, cosine similarity and dot product similarity. However, the method for evaluating similarity needs to be defined based on the application scenario and data characteristics. Other commonly used methods include the Pearson correlation coefficient, XOR distance, etc. Each method suits different application scenarios, and the corresponding index creation method may also differ. For example, floating-point vectors usually adopt Euclidean distance or cosine similarity to evaluate similarity and use the HNSW algorithm to create the index, while binary vectors may need XOR distance to evaluate similarity and the IVF algorithm to create the index. Since the choice of similarity evaluation method directly affects the efficiency and accuracy of vector search, we usually need to try different combinations of index creation algorithms and similarity evaluation methods, adjusting parameters until an ideal query effect is achieved (as with creating an index, this sometimes means trying another vector database).
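To make the last point concrete, here is a minimal sketch showing that the three metrics mentioned above can disagree about which candidate is the "most similar". The two candidate vectors and their labels are made up for illustration; only the metric definitions are standard.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def euclidean(a, b):
    """Euclidean distance (smaller means more similar)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b (larger means more similar)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


query = [1.0, 1.0]
# Hypothetical candidates chosen so that the metrics disagree.
candidates = {
    "same_direction_far": [10.0, 10.0],  # identical direction, large norm
    "close_but_rotated": [1.0, 0.5],     # nearby point, different direction
}

best_by_euclidean = min(candidates, key=lambda k: euclidean(query, candidates[k]))
best_by_cosine = max(candidates, key=lambda k: cosine_similarity(query, candidates[k]))
best_by_dot = max(candidates, key=lambda k: dot(query, candidates[k]))
```

Euclidean distance prefers the nearby point, while cosine similarity and the dot product prefer the vector pointing in the same direction, so the "right" answer depends entirely on what similarity means in the application.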

When conducting a performance test on a relational database, we usually only need to randomly generate a sufficient amount of data, and at most we need to consider the data types and value ranges of fields. This does not work for vector calculations, because the space of high-dimensional vectors is so large that completely random data hardly form clusters. If a clustering method is used to create the index, no cluster will contain many vectors, which makes it difficult to reduce the amount of traversal, so search efficiency cannot be improved. In practice, vector data (such as fingerprint data and face data) tends to gather at certain positions in high-dimensional space, which makes it easy to create an effective index that ensures the efficiency and accuracy of similar-vector queries. However, the performance test can be performed only after obtaining actual data, which also indirectly illustrates that vector-related calculation is scenario specific.
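One way to see why random data is a poor test set is to compare the nearest and farthest distances from a query point. The sketch below is an illustration only; the dimensions 2 and 512, the sample size, and the helper name `nearest_to_farthest_ratio` are arbitrary assumptions. A ratio close to 1 means that all points are almost equally far away, so there is no natural cluster for an index to exploit.

```python
import math
import random


def nearest_to_farthest_ratio(dim, count, seed=0):
    """Ratio of the smallest to the largest distance from a random query
    to `count` uniformly random points in the unit hypercube."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    distances = []
    for _ in range(count):
        point = [rng.random() for _ in range(dim)]
        distances.append(math.dist(query, point))
    return min(distances) / max(distances)


# In 2 dimensions some points are much closer than others ...
low_dim_ratio = nearest_to_farthest_ratio(dim=2, count=2000)
# ... while in 512 dimensions all random points are almost equally far away.
high_dim_ratio = nearest_to_farthest_ratio(dim=512, count=2000)
```

Real data such as face or fingerprint vectors does not behave like this uniform sample, which is why a meaningful performance test needs actual data.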

From this perspective, a vector database is completely different from a relational database: it is not a ready-to-use product, it involves a great deal of scenario-related work and testing for each task, and using it is more like a programming exercise. In other words, a vector database is more like an implementation project than a directly usable product.

Since this is a programming job built on basic libraries, we just need to choose a programming language that is easy to develop in and provides the related algorithm libraries; there is not much need to specifically procure a vector database.

SPL is exactly such a programming language.

Compared to the host languages (such as C++ and Python) commonly used with vector databases, SPL has significant advantages in program logic, ease of programming, data storage, etc. As long as enough basic libraries are available, it is more convenient to implement such vector search tasks in SPL.

1. Vector-related calculation and preprocessing

SPL itself provides rich and flexible vector-related calculation methods and preprocessing methods, allowing us to quickly perform operations between vectors.

For example, calculate the product of a matrix and a column vector:

A=[[1,2],[2,3],[3,4]]

B=[[1],[2]]

M=mul(A,B)

Euclidean distance between vectors:

A1=10.(rand())

A2=10.(rand())

D=dis(A1,A2)

Cosine similarity (assuming A1 and A2 have already been normalized to unit length):

C=(A1**A2).sum()

PCA dimensionality reduction:

A=[[1,2,3,4],[2,3,1,2],[1,1,1,-1],[1,0,-2,-6]]

A’=pca(A,2)

2. Program logic that matches vector data type

The basic algorithms of current vector databases usually have to be driven from a host programming language (such as C++ or Python), resulting in low development efficiency. SPL has complete program logic that is integrated with the vector data type and the basic algorithms, making development more efficient. In contrast, C++ and Java have no vector data type in the strict sense and only offer libraries for basic algorithms, so it is difficult to perform vector-related calculation in such languages.

3. Easy development and debugging

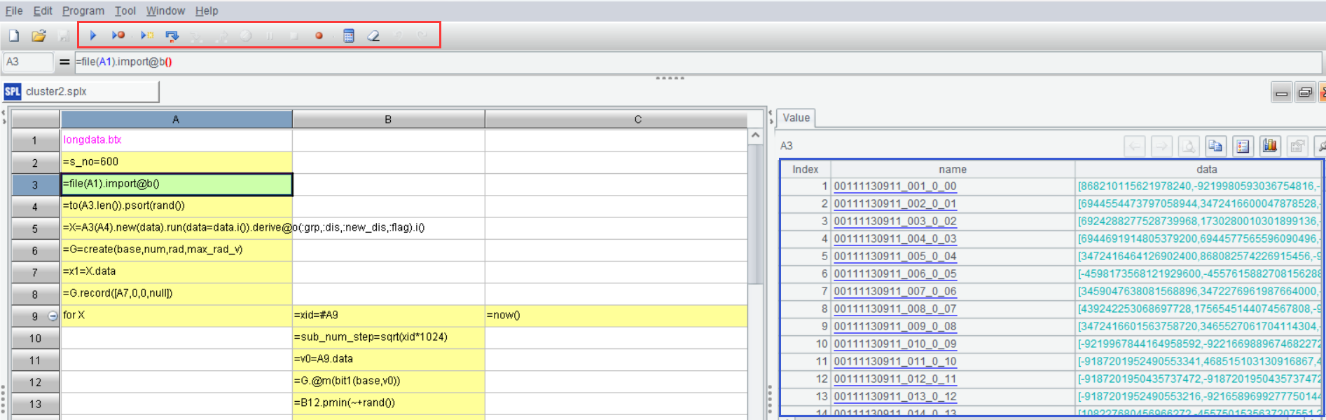

SPL is a grid-style programming language, which makes code very neat and intuitive. The calculation result of each cell will be saved and can be viewed through the result panel on the right side of IDE. By clicking on a cell, programmers can view the calculation result of that step (cell) in real time, making it clear whether the calculation is correct or not (eliminate the annoyance of using the print method of other programs). In addition, SPL also provides very convenient debugging functions, including run, debug, run to cursor, step in, set breakpoints, calculate current cell, etc., which can fully meet the needs of editing and debugging program. The following figure is the IDE window of SPL:

The red box contains the debugging buttons; the blue box is the result panel; the figure shows the calculation result of A3.

4. More than vector calculation

Searching for similar vectors is not a standalone task and usually involves additional work, such as associating other structured data or processing other text data. SPL has its own unique understanding in this regard; using SPL to handle such work is not only simple but also very efficient. For more information, please refer to the relevant documentation at Raqforum. General vector databases, by contrast, cannot handle such work and still need to rely on the host language, or sometimes even on a relational database, which is too troublesome.

5. Storage and high performance

SPL has complete storage ability and can store data in a way that is conducive to efficient querying, based on specific data requirements. To fully ensure computing performance, SPL offers a specialized binary file storage format together with mechanisms such as compression, columnar storage, ordering, and parallel segmentation. This binary file storage is very flexible, which makes it possible to design a storage scheme for any algorithm; we can both utilize the advantages of file storage itself and adjust the storage to the algorithm, so it is not surprising that high performance is achieved.

When it comes to high performance, the programming language should at least make parallel processing convenient. Unfortunately, this is difficult in Python because of the Global Interpreter Lock (GIL): threads in the standard interpreter cannot execute Python code in parallel, so parallel processing in Python is in fact implemented through multiple processes, which incurs a much higher cost than multiple threads. SPL provides complete parallel processing ability, and in many cases its multi-threaded code is the same as the single-threaded code except for the addition of the @m option. For example, calculate the Euclidean distance between 100 vectors and 1 vector:

|   | A |
|---|---|
| 1 | =100.(10.(rand())) |
| 2 | =10.(rand()) |
| 3 | =A1.(dis(~,A2)) |
| 4 | =A1.@m(dis(~,A2)) |

A3 is the single-threaded code; A4 is the multi-threaded code. Although the only difference between the two is @m, the computing efficiency can be improved several times on a multi-core CPU.

SPL practice: search high-dimensional binary vectors (‘Practice’ for short) is a recent case in which we used SPL to search for similar vectors. It is not difficult to see from the practice that searching high-dimensional vectors is highly dependent on the specific scenario, which is reflected in the following two aspects.

First, it is difficult to choose a proper clustering algorithm. The commonly used k-means algorithm needs the parameter k to be determined, yet the k value cannot be determined in this practice. Moreover, the clustering process involves moving the centroid, but the centroid of binary vectors is not easy to define. To create a more efficient index, we had to customize clustering algorithms specific to the data of this practice: split-type clustering and stepwise clustering.

Second, a similarity evaluation method has to be chosen. The most commonly used method in the industry is cosine similarity, but for the binary vectors in this practice, this method goes against intuition. For example, when many dimensions have the value 1 and only a few dimensions differ, the calculated cosine similarity is very large; conversely, when many dimensions have the value 0 and only a few dimensions differ, the calculated cosine similarity is much smaller. Therefore, we chose XOR distance to evaluate similarity, which makes the calculated result more in line with intuition and brings another benefit: it reduces the amount of computation.
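The cosine similarity behaviour described above is easy to reproduce. In the following sketch, the 64-dimensional vectors are made-up examples, not data from the practice: both pairs differ in exactly two dimensions, yet their cosine similarities are far apart while their XOR distances are identical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two 0/1 vectors given as lists."""
    dot = sum(x * y for x, y in zip(a, b))
    # For 0/1 vectors the squared norm equals the number of ones.
    return dot / math.sqrt(sum(a) * sum(b))


def xor_distance(a, b):
    """Number of dimensions in which two 0/1 vectors differ."""
    return sum(x ^ y for x, y in zip(a, b))


DIM = 64

# Dense pair: 60 ones versus 58 ones, differing in 2 dimensions.
dense_a = [1] * 60 + [0] * (DIM - 60)
dense_b = [0, 0] + dense_a[2:]

# Sparse pair: 4 ones versus 2 ones, also differing in 2 dimensions.
sparse_a = [1] * 4 + [0] * (DIM - 4)
sparse_b = [0, 0] + sparse_a[2:]

dense_cos = cosine_similarity(dense_a, dense_b)     # sqrt(58/60), about 0.98
sparse_cos = cosine_similarity(sparse_a, sparse_b)  # 2/sqrt(8), about 0.71
dense_xor = xor_distance(dense_a, dense_b)
sparse_xor = xor_distance(sparse_a, sparse_b)
```

Under XOR distance the two pairs are equally similar, which matches the intuition that "two differing dimensions" should mean the same thing regardless of how many ones the vectors contain.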

The whole process of the practice demonstrates the convenience of programming in SPL. In terms of basic algorithms, SPL offers the bits() function, which converts a binary sequence to a long integer (long) stored as bits. This step turns the computation into bitwise operations, greatly improving computing efficiency (in both the index creation and vector comparison processes). Using the bit1() function to calculate the XOR distance between two vectors further improves computing efficiency.
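The idea behind this optimization can be illustrated in Python. This is an analogue of the technique, not the implementation of SPL's bits() or bit1(): the helper names `pack_bits` and `xor_distance_packed` and the bit order (first element becomes the highest bit) are assumptions made for this sketch.

```python
def pack_bits(bits):
    """Pack a list of 0/1 values into one integer, first bit highest."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


def xor_distance_packed(a, b):
    """Count differing bits: XOR the integers, then count the 1 bits."""
    return bin(a ^ b).count("1")


# Hypothetical 8-dimensional binary vectors.
v1 = [1, 0, 1, 1, 0, 0, 1, 0]
v2 = [1, 1, 1, 0, 0, 0, 1, 1]

p1, p2 = pack_bits(v1), pack_bits(v2)
distance = xor_distance_packed(p1, p2)

# The packed result matches the element-by-element comparison.
elementwise = sum(x != y for x, y in zip(v1, v2))
```

Once the vectors are packed, one XOR and one bit count replace a loop over every dimension, which is where the efficiency gain comes from.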

In addition to the basic algorithms, the two clustering algorithms, split-type clustering and stepwise clustering, are also easy to implement in SPL. Although the logic of both algorithms is relatively complex, the core SPL code occupies only a dozen cells. If C++ or Java were used to implement such algorithms, it would probably require hundreds or even thousands of lines of code.

With respect to high performance, the practice utilizes SPL’s efficient algorithms, such as group@n(), where the @n option means grouping by sequence number; this algorithm is much faster than common hash grouping. In fact, SPL provides many other efficient algorithms, including binary search and ordered computation, which are also easy to use and generally only require adding the @b or @o option.
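Why grouping by sequence number can avoid hashing is easiest to see in a small sketch. The code below illustrates the general idea in Python and makes no claim about how group@n() is implemented internally; the function names and the sample cluster numbers are hypothetical. When each record already carries a 1-based group number, the number can be used directly as a position, so no hash value has to be computed or compared.

```python
from collections import defaultdict


def group_by_number(items, numbers, group_count):
    """Place each item directly into slot number-1 (numbers are 1-based)."""
    groups = [[] for _ in range(group_count)]
    for item, number in zip(items, numbers):
        groups[number - 1].append(item)
    return groups


def group_by_hash(items, numbers):
    """Reference version: group through a hash table keyed by number."""
    table = defaultdict(list)
    for item, number in zip(items, numbers):
        table[number].append(item)
    return table


vectors = ["v0", "v1", "v2", "v3", "v4"]
cluster_numbers = [2, 1, 2, 3, 1]  # hypothetical cluster assignment

by_number = group_by_number(vectors, cluster_numbers, group_count=3)
by_hash = group_by_hash(vectors, cluster_numbers)
```

Both versions produce the same groups; the positional version simply skips the hashing step, which matters when the grouping is repeated many times during clustering.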

In terms of parallel processing, multiple steps of the clustering involve parallel processing. SPL can carry out parallel processing by simply adding the @m option to common functions (such as group@nm()), thereby making full use of the computing resources of a multi-core CPU. By contrast, Python lacks such a simple mechanism, so it is not as easy to use as imagined.

Although big data is not involved in this practice, handling big data is not a problem for SPL. SPL has a complete cursor mechanism; with it and an efficient storage scheme, we can easily write efficient programs for vector calculation.

The most direct impression we gained from the practice is that searching for similar vectors is not as simple as imagined, and usually cannot be implemented just by combining and permuting the algorithm APIs provided by a vector database (in fact, even combining and permuting the APIs requires considerable statistical and mathematical knowledge, and succeeds only after trial and error). In this case, using SPL may be a better choice.

In terms of deployment, general vector databases are usually heavy, which makes them complicated to deploy, debug, and maintain. Some vector databases can be used only in the cloud, yet in many scenarios the data cannot be transferred to the cloud for security reasons. In comparison, SPL is very light and simpler to deploy, debug and maintain, and can even be embedded in an application, making it possible to utilize the vector calculation ability of SPL anywhere.

Currently, SPL’s set of clustering algorithms is not yet complete. However, as SPL continues to add basic algorithms, it will become more like a vector database than existing vector databases.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc_SPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/cFTcUNs7

Youtube 👉 https://www.youtube.com/@esProc_SPL
