SPL: Accessing Cloud Object Storage

SPL provides a set of functions for several cloud object storage services (Amazon S3, Alibaba Cloud OSS, Google GCS, and Microsoft Azure) that read and write cloud data as easily as local data files. This makes it easy to apply SPL's flexible computing capabilities to data in the cloud. Let's use Amazon S3 as an example to walk through the details.

Amazon S3

Create/Close Connection

The Java API for reading and writing S3 requires four parameters: access key, secret key, region, and endpoint. Passing them to s3_open in SPL creates an S3 connection:

s3_open(accessKey : secretKey : region : endpoint);

When you are done with the connection, close it with s3_close(s3Conn).

Example: A1 creates a connection, and after performing some read/write and calculation operations, A3 closes the connection created by A1.

|   | A |
|---|---|
| 1 | =s3_open("AKIA5******MD7AFM2NB":"YOUR_SECRET_KEY":"us-west-1":"https://s3.us-west-1.amazonaws.com") |
| 2 | …… |
| 3 | =s3_close(A1) |
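The endpoint passed to s3_open in this example follows Amazon S3's regional endpoint pattern, https://s3.<region>.amazonaws.com. As a minimal sketch outside SPL (the helper name s3_regional_endpoint is hypothetical), the endpoint can be derived from the region so the two arguments cannot drift apart. It assumes the standard commercial AWS partition; the China regions, for example, use a different domain and are not handled.

```python
import re

# Hypothetical helper: build the S3 regional endpoint URL for a region name.
# Assumes the commercial-partition pattern https://s3.<region>.amazonaws.com.
_REGION_PATTERN = re.compile(r"[a-z]{2}(-[a-z]+)+-\d+")


def s3_regional_endpoint(region: str) -> str:
    """Return the regional S3 endpoint for a region such as 'us-west-1'."""
    if not _REGION_PATTERN.fullmatch(region):
        raise ValueError(f"unexpected region name: {region!r}")
    return f"https://s3.{region}.amazonaws.com"


if __name__ == "__main__":
    # Same region/endpoint pair as the s3_open example.
    print(s3_regional_endpoint("us-west-1"))
```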

Maintain buckets

An S3 account can hold multiple buckets. s3_bucket(s3Conn, bucket) checks whether a bucket exists and, with options, can also create or delete it.

Example:

|   | A |
|---|---|
| 1 | =s3_open(accessKey : secretKey : region : endpoint) |
| 2 | =s3_bucket(A1,"spl-bucket-test1") |
| 3 | =s3_bucket@c(A1,"spl-bucket-test1") |
| 4 | =s3_bucket@d(A1,"spl-bucket-test1") |
| 5 | =s3_close(A1) |

A2 checks whether a bucket named "spl-bucket-test1" exists, returning true if it does and false if it does not.

A3 uses the @c option to create the "spl-bucket-test1" bucket. It returns true if the bucket is created, and false if the bucket already exists or creation fails for any other reason.

A4 uses the @d option to delete the "spl-bucket-test1" bucket. It returns true if the deletion succeeds, and false if the bucket is not empty or deletion fails for any other reason.
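Since s3_bucket@c only returns false when creation fails, it can help to check a name against Amazon S3's general purpose bucket naming rules before calling it: 3 to 63 characters; only lowercase letters, digits, dots, and hyphens; a letter or digit at both ends; no two adjacent periods; and not formatted like an IP address. The Python sketch below (is_valid_bucket_name is a hypothetical name) covers only these core rules; AWS also reserves certain prefixes and suffixes, which it does not check.

```python
import re

# Hypothetical pre-check for Amazon S3 general purpose bucket names.
# Core rules only; reserved prefixes/suffixes defined by AWS are not checked.
_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IPV4_LIKE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if name satisfies the core S3 bucket naming rules."""
    if not _NAME.fullmatch(name):      # length 3-63, allowed chars, alnum at both ends
        return False
    if ".." in name:                   # no two adjacent periods
        return False
    if _IPV4_LIKE.fullmatch(name):     # must not look like an IPv4 address
        return False
    return True


if __name__ == "__main__":
    for candidate in ["spl-bucket-test1", "SPL_Bucket", "ab", "192.168.0.1"]:
        print(candidate, is_valid_bucket_name(candidate))
```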

List buckets/files

s3_list(s3Conn, [bucket]) lists all buckets when bucket is omitted, or the files in that bucket when it is supplied.
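The optional bucket argument therefore switches s3_list between two modes. The Python model below is purely illustrative: list_store and the dict layout are hypothetical, nothing here talks to S3, and it is not how SPL implements s3_list. In this model, omitting the bucket returns bucket names and supplying one returns that bucket's object keys. S3 keys live in a flat namespace, so a key such as data/orders.txt is simply a name that contains a slash.

```python
from typing import Dict, List, Optional

# Hypothetical in-memory stand-in for an object store: bucket -> {key: content}.
Store = Dict[str, Dict[str, bytes]]


def list_store(store: Store, bucket: Optional[str] = None) -> List[str]:
    """Model the two modes: bucket names when bucket is None, else object keys."""
    if bucket is None:
        return sorted(store)              # all bucket names
    if bucket not in store:
        raise KeyError(f"no such bucket: {bucket}")
    return sorted(store[bucket])          # keys in that bucket (flat namespace)


if __name__ == "__main__":
    # Made-up buckets and keys for illustration.
    demo: Store = {
        "spl-bucket-test1": {"nation.txt": b"", "data/orders.txt": b""},
        "spl-bucket-test2": {},
    }
    print(list_store(demo))
    print(list_store(demo, "spl-bucket-test1"))
```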

Example:

|   | A |
|---|---|
| 1 | =s3_open(accessKey : secretKey : region : endpoint) |
| 2 | =s3_list(A1) |
| 3 | =s3_list(A1,"spl-bucket-test1") |
| 4 | =s3_close(A1) |

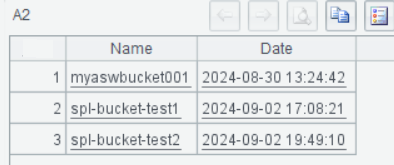

After execution, A2 lists all buckets:

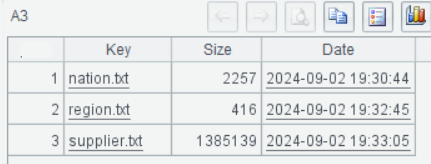

A3 lists the files under the "spl-bucket-test1" bucket:

Upload and download files

s3_copy(s3Conn, bucket : s3File, [localFile]) transfers files in either direction: without options it downloads an S3 file to a local file, and with the @u option it uploads a local file to S3.

Example:

|   | A |
|---|---|
| 1 | =s3_open(accessKey : secretKey : region : endpoint) |
| 2 | =s3_copy(A1, "spl-bucket-test1":"nation.txt","E:/nation2.txt") |
| 3 | =s3_copy@u(A1, "spl-bucket-test1":"nation3.txt","E:/nation2.txt") |
| 4 | =s3_close(A1) |

A2 downloads nation.txt from the "spl-bucket-test1" bucket and saves it as the local file E:/nation2.txt.

A3 uses the @u option to upload the local file E:/nation2.txt to S3 as nation3.txt.
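In the example, the @u option is what switches s3_copy from downloading to uploading. The Python model below mirrors A2 and A3 with a dict standing in for the bucket and a temporary directory standing in for E:/. copy_object, round_trip_demo, and the sample content are hypothetical, and nothing here calls S3.

```python
import tempfile
from pathlib import Path
from typing import Dict

# Hypothetical stand-in for a single bucket: object key -> file content.
Bucket = Dict[str, bytes]


def copy_object(bucket: Bucket, key: str, local_path: Path, upload: bool = False) -> None:
    """Model the copy direction: download by default, upload when upload=True (like @u)."""
    if upload:
        bucket[key] = local_path.read_bytes()     # local file -> object store
    else:
        local_path.write_bytes(bucket[key])       # object store -> local file


def round_trip_demo() -> Bucket:
    """Mirror A2 and A3 of the example using a temporary directory instead of E:/."""
    bucket: Bucket = {"nation.txt": b"sample content"}   # made-up content
    with tempfile.TemporaryDirectory() as tmp:
        local = Path(tmp) / "nation2.txt"
        copy_object(bucket, "nation.txt", local)                # like A2: download
        copy_object(bucket, "nation3.txt", local, upload=True)  # like A3: upload
    return bucket


if __name__ == "__main__":
    print(sorted(round_trip_demo()))
```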

Load file data

s3_file(s3Conn, bucketName, s3File [:charset]) returns a file object for an S3 file, so its data can be loaded directly and used in subsequent calculations.

Example:

|   | A |
|---|---|
| 1 | =s3_open(accessKey : secretKey : region : endpoint) |
| 2 | =s3_file(A1, "spl-bucket-test1", "nation.txt") |
| 3 | =A2.import@t() |
| 4 | =A2.cursor@t().fetch(5) |
| 5 | =A2.size() |
| 6 | =A2.date() |
| 7 | =s3_close(A1) |

A2 defines file object on S3;

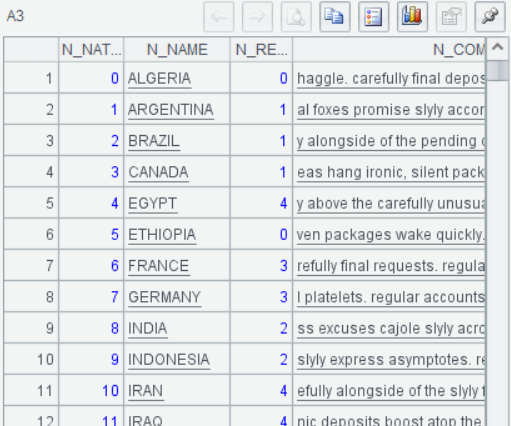

A3 loads all the data of A2 using the import() function:

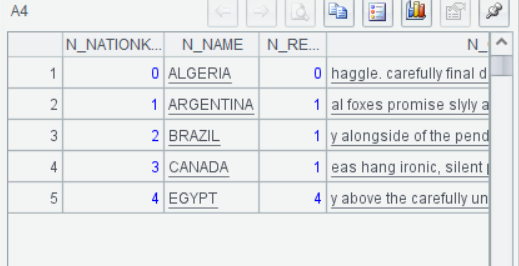

A4 uses the cursor() function to read file data in cursor mode and retrieve the first 5 rows:

A5 obtains file size; A6 obtains the last modification time of the file.
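For readers more familiar with Python, A3 and A4 roughly correspond to the two reading styles below. This is an analogy, not SPL's implementation, and it assumes nation.txt is tab-separated with field names in its first row. The eager version loads every row into memory at once; the lazy version reads rows one at a time and stops after n, which is what makes a cursor suitable for large files. The function names and sample text are hypothetical.

```python
import csv
import io
from itertools import islice
from typing import Dict, List, TextIO


def import_all(f: TextIO) -> List[Dict[str, str]]:
    """Eager read, loosely like import@t(): tab-separated, first line holds field names."""
    return list(csv.DictReader(f, delimiter="\t"))


def fetch_first(f: TextIO, n: int) -> List[Dict[str, str]]:
    """Lazy read, loosely like cursor@t().fetch(n): stops after n data rows."""
    reader = csv.DictReader(f, delimiter="\t")
    return list(islice(reader, n))


if __name__ == "__main__":
    # Made-up sample text; the real nation.txt may have other fields.
    sample = "ID\tNAME\n" + "".join(f"{i}\tnation{i}\n" for i in range(8))
    print(len(import_all(io.StringIO(sample))))      # 8: every data row is loaded
    print(fetch_first(io.StringIO(sample), 5)[-1])   # the fifth data row
```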

Alibaba Cloud OSS/Google GCS/Microsoft Azure

The functions for the other cloud object storage services are nearly identical to the S3 ones; only the prefix differs.

Alibaba Cloud OSS functions are prefixed with 'oss':

oss_open(), oss_close(), oss_bucket(), oss_list(), oss_copy(), oss_file();

Google GCS functions are prefixed with 'gcs':

gcs_open(), gcs_close(), gcs_bucket(), gcs_list(), gcs_copy(), gcs_file();

Microsoft Azure functions are prefixed with 'was':

was_open(), was_close(), was_bucket(), was_list(), was_copy(), was_file();
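Because only the prefix differs, a program that generates SPL scripts for several providers could keep the prefixes in a single table. The Python helper below is hypothetical (spl_function_name is not part of SPL); it simply assembles the function names listed above from a provider and an operation.

```python
# Prefixes from the lists above; every provider shares the same operation names.
PREFIXES = {
    "Amazon S3": "s3",
    "Alibaba Cloud OSS": "oss",
    "Google GCS": "gcs",
    "Microsoft Azure": "was",
}
OPERATIONS = ("open", "close", "bucket", "list", "copy", "file")


def spl_function_name(provider: str, operation: str) -> str:
    """Return e.g. 'was_list' for ('Microsoft Azure', 'list')."""
    if provider not in PREFIXES:
        raise ValueError(f"unknown provider: {provider!r}")
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation!r}")
    return f"{PREFIXES[provider]}_{operation}"


if __name__ == "__main__":
    print([spl_function_name(p, "file") for p in PREFIXES])
```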

The main difference lies in the functions that create connections, which take different parameters. Here are the four open functions for comparison:

s3_open(accessKey : secretKey : region : endpoint);

oss_open(AccessKey, SecretKey, url);

gcs_open(json); // json is the content of the JSON file containing connection information downloaded from the Google Cloud website; pass the file's JSON string here

was_open(AccountName, AccountKey, EndpointSuffix, DefaultEndpointsProtocol);
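The four parameters of was_open have the same names as the fields of a standard Azure Storage connection string (DefaultEndpointsProtocol=...;AccountName=...;AccountKey=...;EndpointSuffix=...), which the Azure portal displays under a storage account's access keys. If you only have such a connection string, a hypothetical Python helper like the one below can split it into the four values, returned in was_open's parameter order. One detail worth handling: account keys are base64 and may end in '=', so each segment is split on its first '=' only.

```python
from typing import Dict, Tuple


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split 'Key=Value;Key=Value;...' into a dict, keeping any '=' inside values."""
    fields: Dict[str, str] = {}
    for part in conn.strip().split(";"):
        if not part:
            continue                              # tolerate a trailing ';'
        key, sep, value = part.partition("=")     # split on the first '=' only
        if not sep:
            raise ValueError(f"malformed segment: {part!r}")
        fields[key.strip()] = value.strip()
    return fields


def was_open_args(conn: str) -> Tuple[str, str, str, str]:
    """Return (AccountName, AccountKey, EndpointSuffix, DefaultEndpointsProtocol)."""
    f = parse_connection_string(conn)             # KeyError below if a field is missing
    return (f["AccountName"], f["AccountKey"],
            f["EndpointSuffix"], f["DefaultEndpointsProtocol"])


if __name__ == "__main__":
    # Made-up account name and key, for illustration only.
    demo = ("DefaultEndpointsProtocol=https;AccountName=demoaccount;"
            "AccountKey=ZGVtb2tleQ==;EndpointSuffix=core.windows.net")
    print(was_open_args(demo))
```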

The information each connection requires is documented on the respective cloud provider's website and can be obtained from your own cloud account.
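Because gcs_open takes the content of the downloaded JSON file rather than a path, it may be worth sanity-checking that file before passing its text along. The Python sketch below assumes the file is a Google Cloud service-account key, whose JSON contains fields such as type, project_id, private_key, and client_email; that credential type is an assumption, and the function names are hypothetical. It returns the text unchanged, which is the string you would hand to gcs_open.

```python
import json
from pathlib import Path

# Fields found in a Google Cloud service-account key file (assumed credential type).
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_gcs_key_json(text: str) -> str:
    """Validate service-account key JSON text and return it unchanged."""
    data = json.loads(text)                       # JSONDecodeError is a ValueError
    if not isinstance(data, dict):
        raise ValueError("key file must contain a JSON object")
    missing = [k for k in REQUIRED_FIELDS if k not in data]
    if missing:
        raise ValueError(f"key file lacks fields: {missing}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected credential type: {data['type']!r}")
    return text


def load_gcs_key_json(path: Path) -> str:
    """Read the downloaded key file and return its checked JSON text."""
    return check_gcs_key_json(path.read_text(encoding="utf-8"))
```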

Once a connection is created, SPL can read, write, and compute on data in any of these cloud object stores in the same way.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL
